Blink: Data to AI/ML Production Pipeline Code in Just a Few Clicks

You have the data and now want to build a really really good AI/ML model and deliver to production. There are three options available today:

- Write the code yourself in a Jupyter notebook/R Studio etc., for training/validation and dev-ops model handoff. You decided to do the feature engineering also.

- Build your own features like above, however, use Automatic Machine Learning. In production find a way to recreate those features again and then do the scoring with the AutoML scoring code.

- Have a tool to discover the features, do the Automatic Machine Learning and write the code for the entire pipeline for you.

In this blog post, I want to highlight the #3 option — H2O Driverless AI is helping data scientists be a lot more productive and spend time on the business use case and high-level tasks, rather than doing time-consuming feature discovery and model tuning.

Why is Automatic Feature Engineering so important?

Manually created features are often domain knowledge-based or when a data scientist stumbles into it through 100s of iterations. Automatic Feature Engineering allows a tool like H2O Driverless AI to validate 10s to 10s of 1000s of features in conjunction with model tuning, so they are useful. Domain knowledge-based features never go away but work in conjunction with discovered features. Cross-validation is done in the tool, to avoid overfitting in the final pipeline. See the list of feature transformers that Driverless AI will use to create features from data.

http://docs.h2o.ai/driverless-ai/latest-stable/docs/userguide/transformations.html

Data Set

I just randomly picked this data set from my favorite data set website, UCI ML repository:

https://archive.ics.uci.edu/ml/datasets/Diabetes+130-US+hospitals+for+years+1999-2008

Citation: Beata Strack, Jonathan P. DeShazo, Chris Gennings, Juan L. Olmo, Sebastian Ventura, Krzysztof J. Cios, and John N. Clore, “Impact of HbA1c Measurement on Hospital Readmission Rates: Analysis of 70,000 Clinical Database Patient Records,” BioMed Research International, vol. 2014, Article ID 781670, 11 pages, 2014.

The dataset represents 10 years (1999–2008) of clinical care at 130 US hospitals and integrated delivery networks. It includes over 50 features representing patient and hospital outcomes. Information was extracted from the database for encounters that satisfied the following criteria.

- It is an inpatient encounter (a hospital admission).

- It is a diabetic encounter, that is, one during which any kind of diabetes was entered into the system as a diagnosis.

- The length of stay was at least 1 day and at most 14 days.

- Laboratory tests were performed during the encounter.

- Medications were administered during the encounter.

The data contains attributes such as patient number, race, gender, age, admission type, time in hospital, medical specialty of admitting physician, number of lab test performed, HbA1c test result, diagnosis, number of medication, diabetic medications, number of outpatient, inpatient, and emergency visits in the year before the hospitalization, etc.

The data has 102K rows and 50 columns. So what should we build the model for? Well, that depends on what you want to predict in the future, given the same columns, but different data. I picked the ‘readmitted’ column that has three values — NO, ‘30>’ or ‘30<’. You can build a model technically with any target column, but it’s only meaningful if it solves a business problem or question that is of value.

Building an AI/ML Model

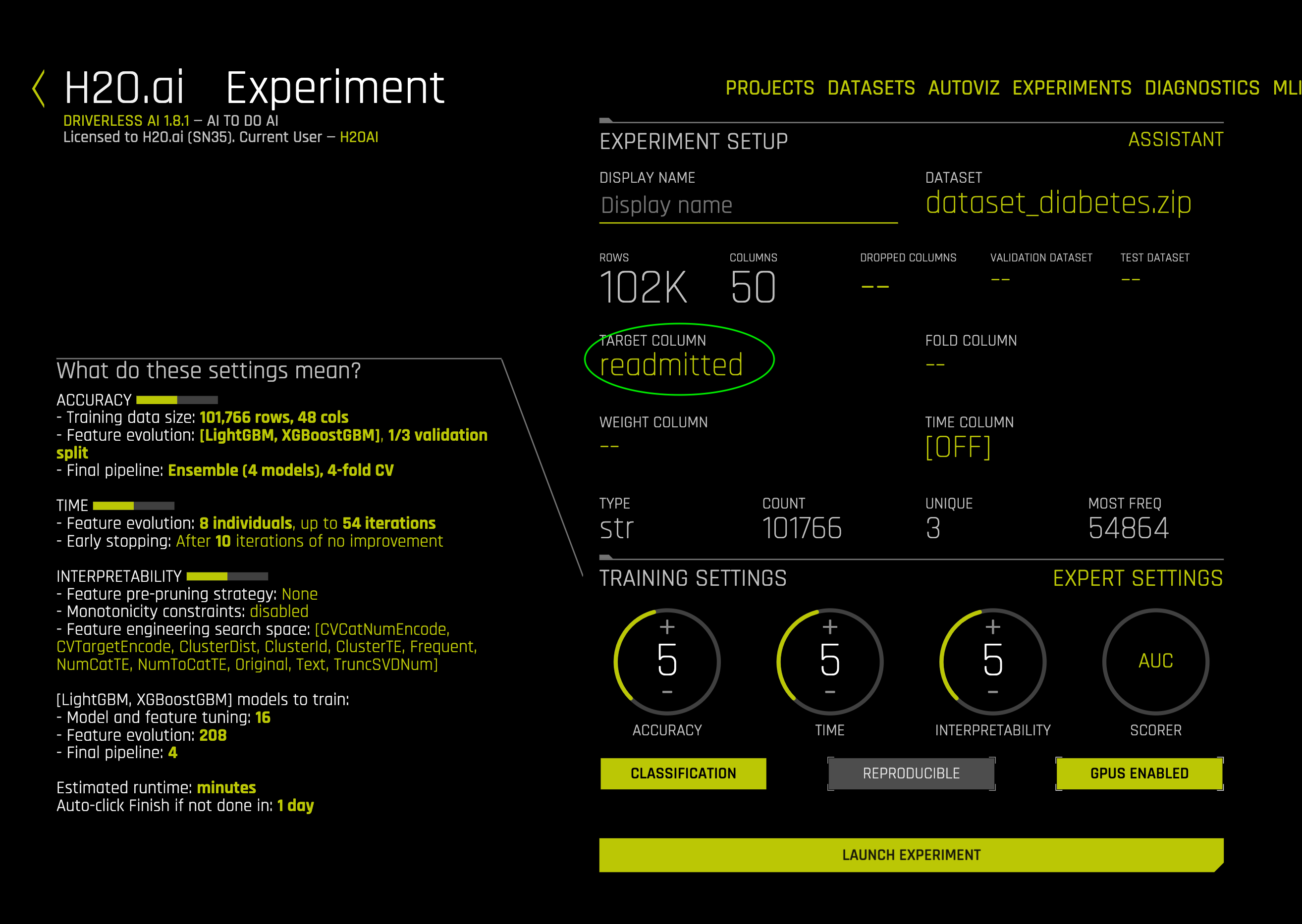

I dragged and dropped the dataset_diabetes.csv.zip into Driverless AI and then clicked Predict . I picked the ‘readmitted’ column as Target variable and clicked the LAUNCH EXPERIMENT button with Defaults.

In literally less than 5 min, Driverless AI had already created 22 models on 1,476 features and you can also see the micro-averaged confusion matrix values on the validation dataset (as it does cross-validation internally). Those 1,476 features consist of the original 50 features and the rest automatically generated by Driverless AI, using an evolutionary technique.

If we look at the VARIABLE IMPORTANCE section, you can see how the Automatic Feature Engineering is building composite features and does feature transformations such as TruncatedSVD (PCA), Cluster Target Encoding, Numeric to Categorical Target Encoding, Cluster Distance Transformations, etc., Here’s the list of all the transformations , that’s built into Driverless AI. You can add even more features to the experiment from a custom recipe GitHub Link .

After roughly 15 min, the experiment finished with 0.792 AUC by evolving both the algorithm hyperparameters (tuning) + engineered features.

You can download the Experiment Report (that was auto-generated by the Driverless AI) from the UI. I have a copy here on my GitHub repo. This word doc is also known as AutoDoc that has everything you need to know about the model that AI built for you!

Dude, AI wrote my Production Code in full

Well, we have the model documentation, what about production code? Should I now write code to generate all the features again and we can run the final algorithm model?

N O

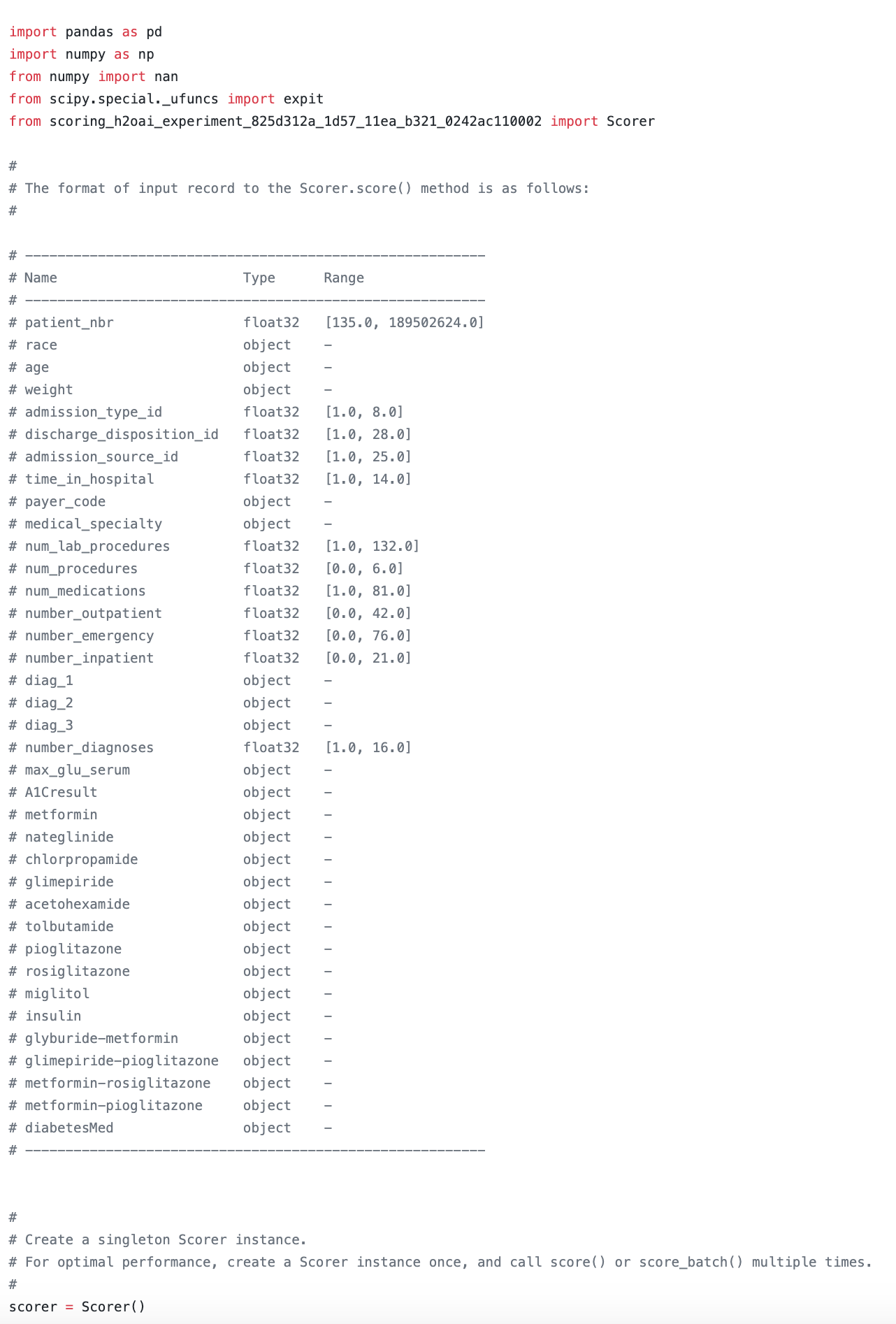

Well, that’s the whole point of AI doing AI. All the feature transformations and winning algorithms and parameters that Driverless AI discovered are actually written as compiled code by Driverless AI. I downloaded the scorer.zip from the UI by clicking on the “DOWNLOAD PYTHON SCORING PIPELINE” button. The scorer.zip has the code for scoring — both the feature generation and model scoring happens inside a python scoring .whl file, so you don’t need to worry about the complicated stuff.

You can now score any new data with the AI/ML model by just invoking the scoring pipeline in your application — simply import and refer to the python scoring .whl file, generated by H2O Driverless AI.

Scoring the future data

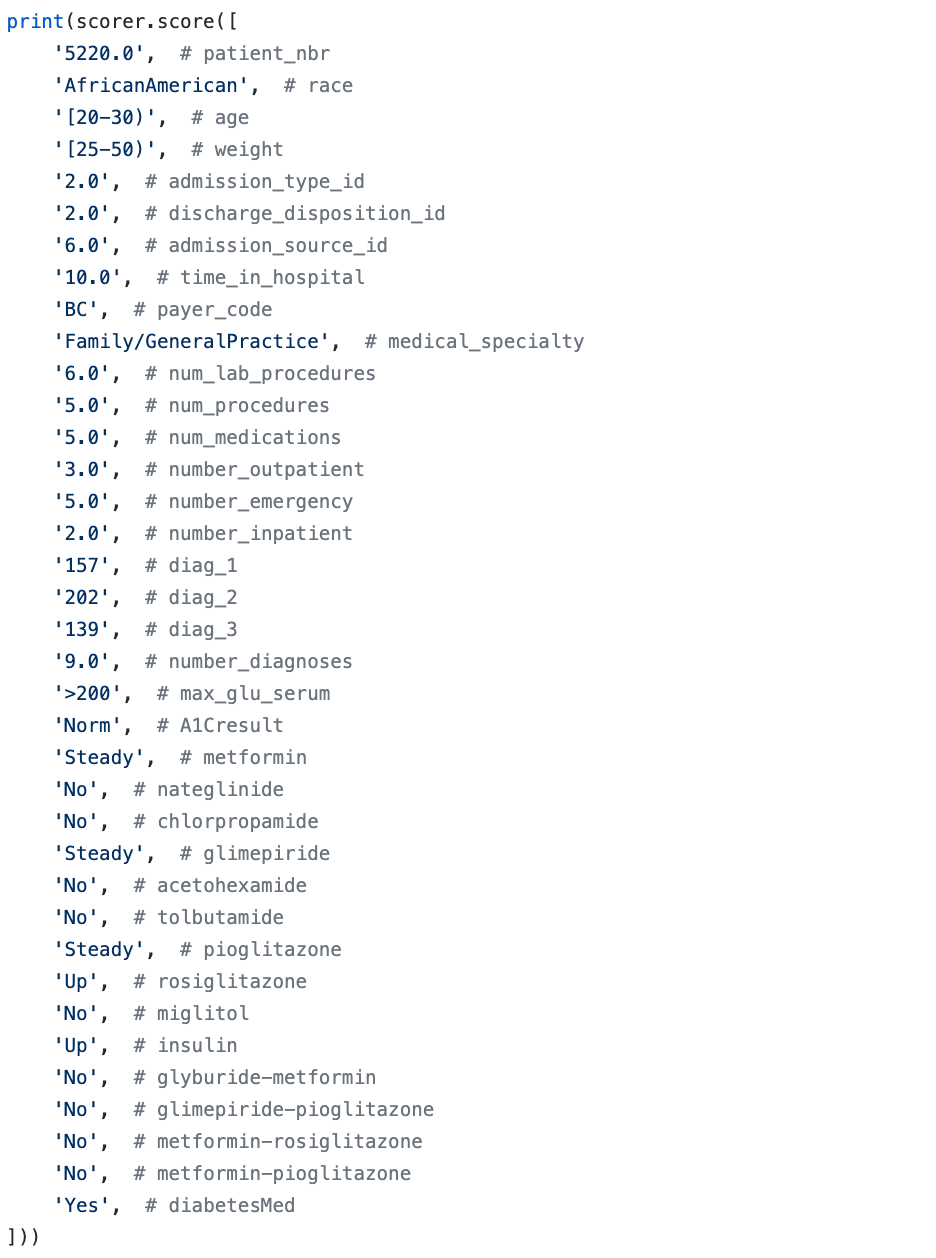

Sample python code that tries to find out if the patient is going to be readmitted in the hospital or not, given some fresh data. This example.py is also provided by H2O Driverless AI for you in the scoring package, so it’s easy to cut/paste/modify.

Output:

readmitted. <= 30, readmitted.>30, readmitted.NO

0.11, 0.08, 0.802

It sounds like the likelihood of ‘NO readmission’ for this patient, given the higher probability of that class readmitted.NO .

FYI — Driverless AI can also create Java and C++ runtime pipelines also known as MOJO. Here’s the link for more details: http://docs.h2o.ai/driverless-ai/latest-stable/docs/userguide/python-mojo-pipelines.html

Java and C++ Scoring times can be in millisecond territory and you can even score the patients or whatever data realtime. Clicking the “BUILD MOJO SCORING PIPELINE” and once finished, download the Java, C++, or R mojo scoring artifacts with examples/runtime libs.

Conclusion

In this blog post, we saw how we are able to automate and create production pipeline AI/ML model code from the Data with minimal # of clicks and default choices. The model code has both feature engineering and model scoring embedded in it. The model can be consumed by applications that can just send the data to the compiled scoring code and get the results back and use it as part of the workflow.