Using AI to unearth the unconscious bias in job descriptions

“Diversity is the collective strength of any successful organization.”

Unconscious Bias in Job Descriptions

Unconscious bias affects all of us in one way or another. It is defined as prejudice or unsupported judgment in favor of or against one thing, person, or group compared with another, usually in a way considered unfair. One of the most prominent examples of unconscious bias appears in companies' hiring processes: job bulletins often contain elements that favor a particular gender, race, or group. Biased job descriptions limit not only the candidate pool but also diversity in the workplace. Companies therefore need to check their job descriptions for bias and eliminate it to build a healthy and fair organizational culture.

How can Artificial Intelligence help?

Machine learning and artificial intelligence technologies have made it possible to analyze data from various sources accurately and precisely. Whether the data is structured or unstructured, AI-backed tools can deliver better results than manual processing. However, the end users of these tools will not always have a background in software engineering or machine learning. For such tools to become mainstream, they must be accessible. It is therefore valuable to build these AI applications into a platform that lets business users interact with data directly, without unnecessary hassle or complexity. H2O Wave was created to address precisely this issue, as the following case study demonstrates.

What is H2O Wave?

H2O Wave is an open-source Python development framework that makes it fast and easy for data scientists, machine learning engineers, and software developers to build real-time, interactive AI apps with sophisticated visualizations. Apps are written entirely in Python, so HTML, CSS, and JavaScript skills are not required. Wave apps run natively on Linux, macOS, Windows, or any OS where Python is supported.

How to get started

We just launched our 14-day free trial of H2O AI Cloud earlier this week. You can get hands-on experience with our demo Wave apps and our award-winning machine learning platform, H2O Driverless AI. Everything is pre-installed, so all you need is a web browser. Sign up for your free trial here. Please use your corporate email address for immediate access. For more information about the free trial, check out this blog post.

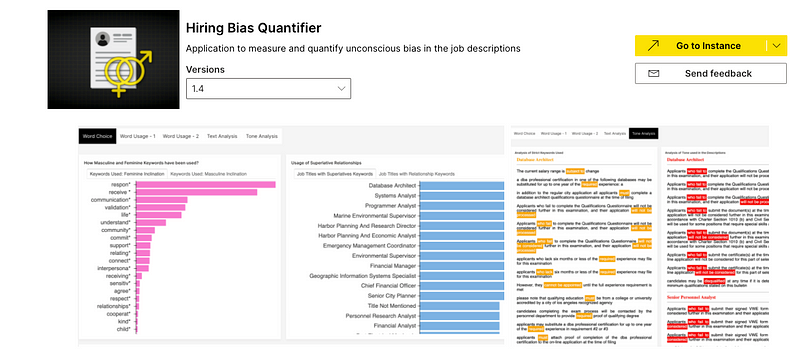

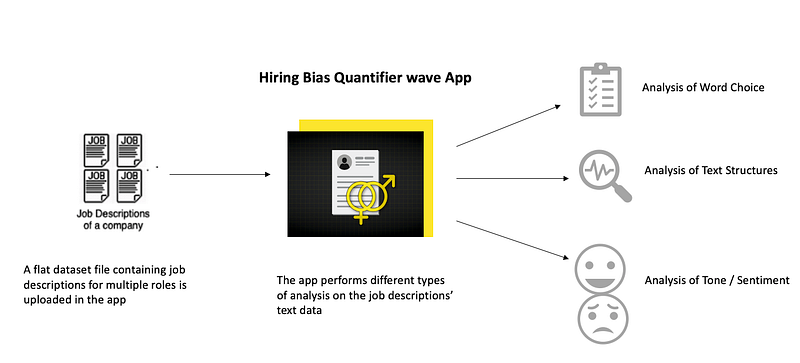

In this article, we create a hiring bias quantifier using the H2O Wave toolkit and use it to analyze job descriptions for potential bias. The target audience for this app includes business analysts, data scientists, and human resources personnel.

Hiring Bias Quantifier

The Hiring Bias Quantifier uses text and statistical analysis to measure and quantify unconscious bias in job descriptions. The application combines several types of analysis that measure, quantify, and analyze unconscious bias, specifically gender bias, in job descriptions. It then produces insights into how much bias the job descriptions contain and compares the results against a benchmark.

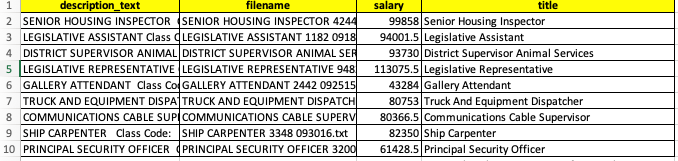

Let’s now see how this app can help us detect unconscious bias in a dataset of job descriptions. In this article, we work with a preprocessed version of the Los Angeles Job Description dataset from Kaggle, which includes the following attributes:

Our job is to detect whether the text in the description_text column contains unconscious bias.
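The first step is to read the description_text values from the dataset. The sketch below shows one rough way to do this. It reads a CSV with Python's standard csv module and keeps only the non-empty values of that column. The sample rows are invented for the example, and this is not the app's actual loading code.

```python
import csv
import io
from typing import Iterable, List


def load_descriptions(lines: Iterable[str], column: str = "description_text") -> List[str]:
    """Read CSV text and return the non-empty, stripped values of `column`."""
    descriptions = []
    for row in csv.DictReader(lines):
        # row.get(...) can be None when a row has fewer fields than the header.
        text = (row.get(column) or "").strip()
        if text:
            descriptions.append(text)
    return descriptions


# A tiny in-memory stand-in for the real dataset (hypothetical rows).
sample_csv = io.StringIO(
    "job_title,description_text\n"
    "Analyst,We are looking for a collaborative analyst.\n"
    "Engineer,\n"
    'Manager,"Must have an aggressive, competitive drive."\n'
)
descriptions = load_descriptions(sample_csv)
print(len(descriptions))  # 2
```

For a real file, `with open("job_descriptions.csv", newline="", encoding="utf-8") as f: load_descriptions(f)` would work the same way. That filename is hypothetical.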

Methodology

The dataset is first loaded into the app. The Hiring Bias Quantifier consists of several machine learning algorithms and models that analyze different parts of the job description text based on word choice, text structure, tone, sentiment, and so on. The app then compiles its findings into a detailed dashboard report. Here are some of the results:

1. Analysis of word choice in the job descriptions

Unconscious bias toward a specific gender in job descriptions can limit both the candidate pool and diversity. The figure below shows some of the ways in which particular word choices can lead to bias.

Let’s look at some of the insights presented by the app:

- Use of Gendered Keywords in Job Descriptions

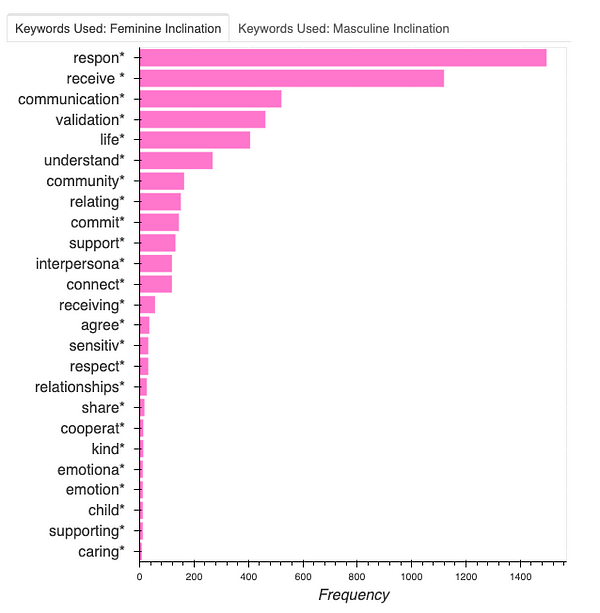

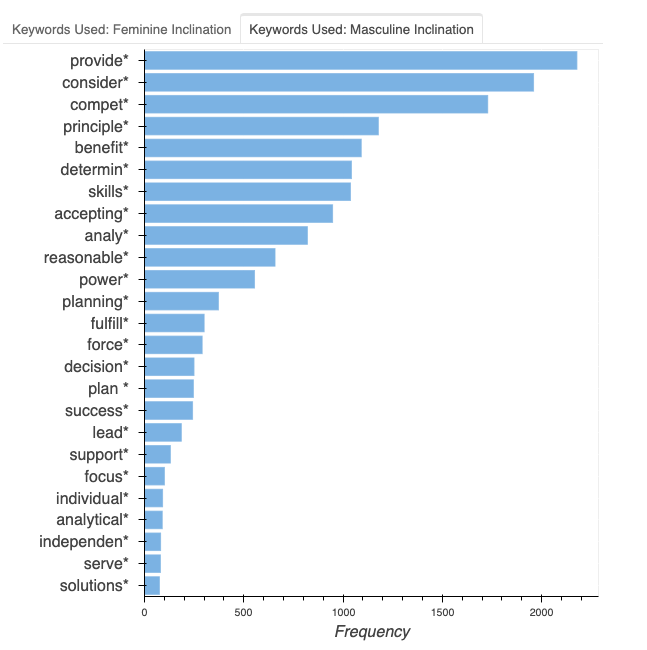

Using gender-specific words in a job description can discourage a particular gender from applying for certain jobs. It has been observed that words such as “aggressive,” “assertive,” or “independent” typically put women off applying for specific roles. The plots below show the male- and female-specific keywords identified in the dataset.

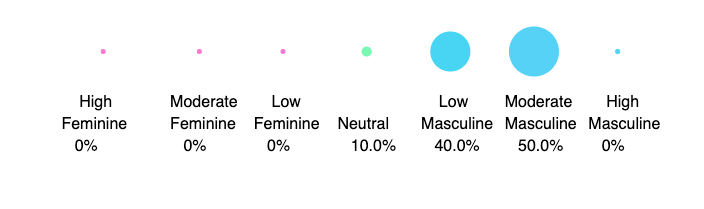
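A minimal way to find gendered keywords is to match each word against lists of masculine-coded and feminine-coded stems. The stem lists below are short, hypothetical samples chosen for this sketch. The app's actual word lists and matching logic are not described here, and practical lists are much longer.

```python
import re
from collections import Counter
from typing import Dict

# Illustrative word stems chosen for this sketch (an assumption), not the app's actual lists.
MASCULINE_STEMS = ("aggress", "assert", "independen", "compet", "dominan", "ambitio")
FEMININE_STEMS = ("collab", "support", "understand", "interpersonal", "compassion", "nurtur")


def gendered_keywords(text: str) -> Dict[str, Counter]:
    """Count words in `text` that begin with a masculine- or feminine-coded stem."""
    counts = {"masculine": Counter(), "feminine": Counter()}
    for word in re.findall(r"[a-z]+", text.lower()):
        # str.startswith accepts a tuple and returns True if any prefix matches.
        if word.startswith(MASCULINE_STEMS):
            counts["masculine"][word] += 1
        elif word.startswith(FEMININE_STEMS):
            counts["feminine"][word] += 1
    return counts


text = "We want an assertive, independent self-starter who supports a collaborative team."
result = gendered_keywords(text)
print(dict(result["masculine"]))  # {'assertive': 1, 'independent': 1}
print(dict(result["feminine"]))   # {'supports': 1, 'collaborative': 1}
```

Summing these counters over all descriptions would give dataset-level keyword frequencies, similar in spirit to the plot above.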

- Distribution of Masculine-Feminine Nature of the Descriptions

For the current pool of job descriptions, the analysis shows that the descriptions are more geared toward male applicants.

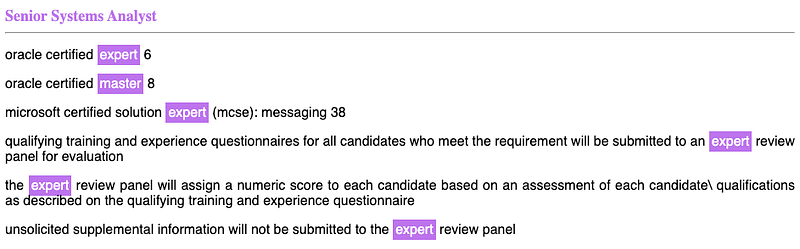
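One simple way to place each description on a masculine-feminine scale is the balance score (m - f) / (m + f). Here m and f are the counts of masculine-coded and feminine-coded words. This score, the zero threshold, and the stem lists are all assumptions for illustration. The app may compute its distribution differently.

```python
import re
from collections import Counter
from typing import List

# Hypothetical stem lists for illustration only.
MASCULINE_STEMS = ("aggress", "assert", "independen", "compet", "dominan", "ambitio")
FEMININE_STEMS = ("collab", "support", "understand", "interpersonal", "compassion", "nurtur")


def gender_score(text: str) -> float:
    """Return (m - f) / (m + f): +1 fully masculine-coded, -1 fully feminine-coded, 0 balanced or none."""
    words = re.findall(r"[a-z]+", text.lower())
    m = sum(w.startswith(MASCULINE_STEMS) for w in words)
    f = sum(w.startswith(FEMININE_STEMS) for w in words)
    return 0.0 if m + f == 0 else (m - f) / (m + f)


def label(score: float) -> str:
    """Map a balance score to a coarse category."""
    if score > 0:
        return "masculine-leaning"
    if score < 0:
        return "feminine-leaning"
    return "neutral"


def distribution(descriptions: List[str]) -> Counter:
    """Count how many descriptions fall into each category."""
    return Counter(label(gender_score(d)) for d in descriptions)


jobs = [
    "Seeking an assertive, competitive leader.",
    "Join a supportive and collaborative team.",
    "Prepare monthly budget reports.",
    "Ambitious self-starter who is independent and supportive.",
]
print(distribution(jobs))
```

The ratio keeps a long description from looking more biased simply because it contains more words overall. It measures only the balance between the two word groups.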

- Higher Use of Superlatives

The following example shows job descriptions that use highly superlative keywords, specifically “Master” and “Expert.” These keywords have a strongly masculine tone and hence signal a preference toward a particular gender.

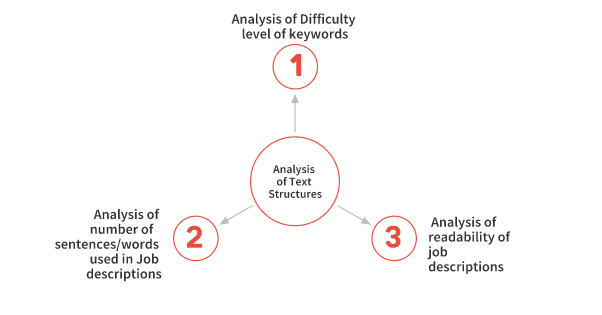
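Superlative detection can be approximated with a whole-word, case-insensitive regular expression. Apart from “master” and “expert,” which come from the example above, the keyword list is hypothetical.

```python
import re
from typing import List

# Hypothetical keyword list; only "master" and "expert" come from the article.
SUPERLATIVES = ["master", "expert", "exceptional", "world-class", "outstanding", "superior", "best"]
SUPERLATIVE_RE = re.compile(
    r"\b(" + "|".join(map(re.escape, SUPERLATIVES)) + r")\b", re.IGNORECASE
)


def find_superlatives(text: str) -> List[str]:
    """Return superlative keywords found in `text`, lower-cased, in order of appearance."""
    return [m.group(1).lower() for m in SUPERLATIVE_RE.finditer(text)]


desc = "The ideal candidate is an Expert in SQL and a master of data modeling."
print(find_superlatives(desc))  # ['expert', 'master']
```

Because of the word boundaries, “Mastery” is not matched. However, a matcher this simple would still flag “Master's degree,” so a practical tool would need some context handling to avoid false positives like that one.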

2. Analysis of Text Structures

Several studies (LinkedIn, Forbes, and Glassdoor) have suggested that both the quality and the quantity of an applicant pool can be significantly influenced by how a job description is written.

“A well-written, complete, and insightful job description can result in attracting some of the top and diverse talents for the role.” On the other hand, a description that lacks vital features (for example, an optimal word count, careful word choice, appropriate language, and a suitable overall tone) may attract fewer candidates.

The text structure of a job description can be analyzed in several ways:

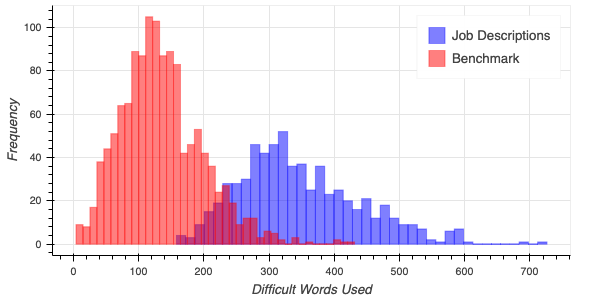

- Analysis of Difficulty Level of Keywords in Job Descriptions

Since readability is of paramount importance, it is a good idea to identify and rework words that readers find difficult. The graph below shows heavy usage of difficult words in the descriptions of various jobs.

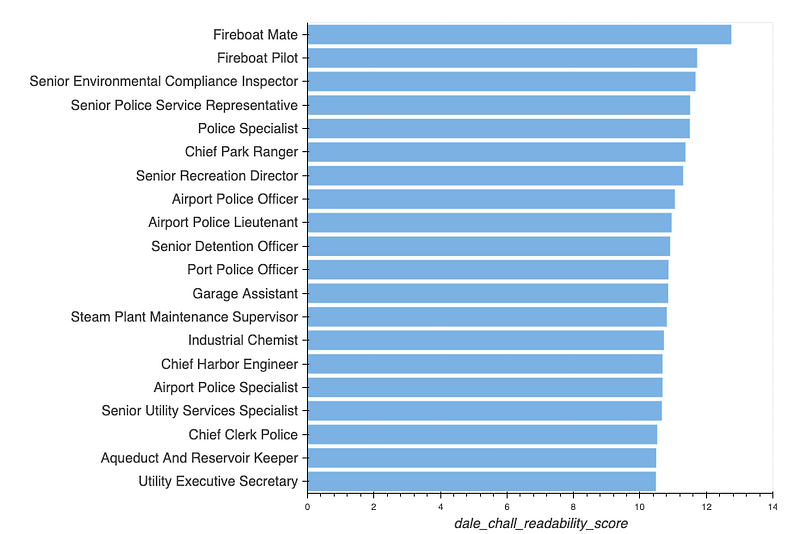
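The article does not say how the app decides that a word is difficult. A common heuristic, assumed here, treats words of three or more syllables as difficult. The syllable counter below is a rough approximation based on groups of vowels, not a dictionary lookup, so it miscounts some words. For example, it gives “candidates” four syllables instead of three.

```python
import re
from typing import List


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, discounting a silent trailing 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith(("le", "ee")) and count > 1:
        count -= 1
    return max(count, 1)


def difficult_words(text: str, min_syllables: int = 3) -> List[str]:
    """Return unique words (lower-cased, first-seen order) with at least `min_syllables` syllables."""
    found: List[str] = []
    for word in re.findall(r"[a-z]+", text.lower()):
        if count_syllables(word) >= min_syllables and word not in found:
            found.append(word)
    return found


def difficult_word_ratio(text: str, min_syllables: int = 3) -> float:
    """Fraction of all words (including repeats) that count as difficult."""
    words = re.findall(r"[a-z]+", text.lower())
    if not words:
        return 0.0
    return sum(count_syllables(w) >= min_syllables for w in words) / len(words)


desc = "Candidates must demonstrate comprehensive knowledge of the team."
print(difficult_words(desc))  # ['candidates', 'demonstrate', 'comprehensive']
print(difficult_word_ratio(desc))  # 0.375
```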

- Readability of Job Descriptions

Similarly, companies should avoid making job descriptions too complex or hard to read. For instance, the analysis found that the job descriptions for the following posts were the least readable.

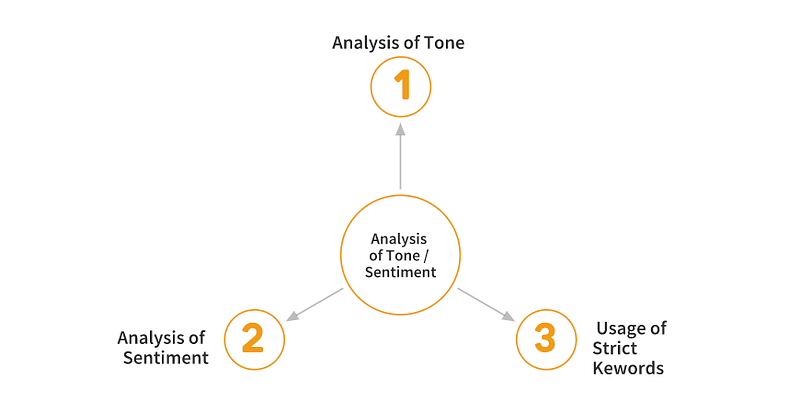
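The readability of whole descriptions is often measured with the Flesch Reading Ease formula:

206.835 - 1.015 × (words / sentences) - 84.6 × (syllables / words)

Higher scores mean easier text. Whether the app uses this particular formula is an assumption. The sketch reuses the same rough syllable heuristic as for difficult words, splits sentences naively on end punctuation, and ranks descriptions from least to most readable.

```python
import re
from typing import List


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, discounting a silent trailing 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith(("le", "ee")) and count > 1:
        count -= 1
    return max(count, 1)


def flesch_reading_ease(text: str) -> float:
    """Flesch Reading Ease score; higher means easier to read."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (206.835
            - 1.015 * (len(words) / len(sentences))
            - 84.6 * (syllables / len(words)))


def least_readable(descriptions: List[str], n: int = 3) -> List[str]:
    """Return the n descriptions with the lowest Flesch Reading Ease scores."""
    return sorted(descriptions, key=flesch_reading_ease)[:n]


easy = "The team is kind. We help you learn."
hard = "Candidates must demonstrate comprehensive proficiency in organizational administration."
print(least_readable([easy, hard], n=1))
```

Long sentences and many polysyllabic words both lower the score. As a result, a single dense sentence like `hard` can even fall below zero.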

3. Analysis of Tone / Sentiment

Sentiment analysis is a subfield of natural language processing (NLP) that identifies and extracts opinions from text. Applying it to job descriptions helps companies gauge their tone and overall sentiment. The tone of a job description should not be too negative or demanding, because that can result in fewer people applying for the job.

Sentences and words that convey moderately or highly negative sentiment should be avoided. The following plot shows the distribution of negative sentiment in the job descriptions.

- Tone of Job Descriptions

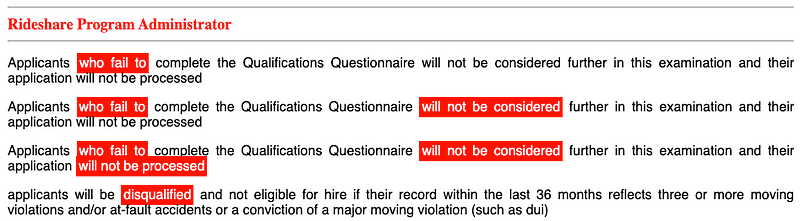

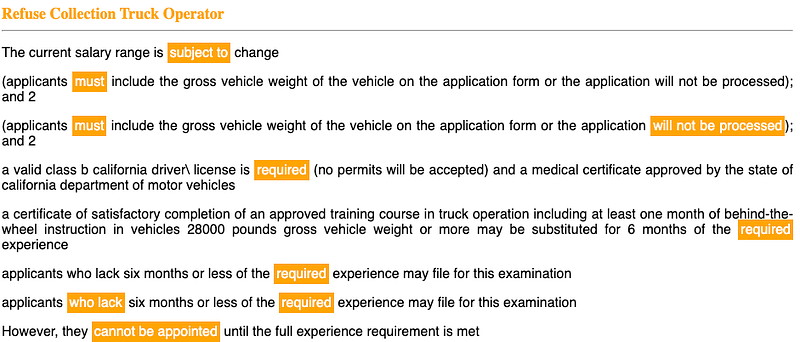

Companies should ensure that the tone of their job descriptions is not overly negative. For instance, words that carry negative sentiment, such as those the app highlights automatically, should be avoided.
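A lexicon-based approach is one simple way to score the sentiment of each sentence. It averages the weights of a sentence's words and reports the negative words so they can be highlighted. The tiny lexicon below is invented for illustration. Production analyzers such as NLTK's VADER rely on much larger, validated lexicons plus rules for negation and intensity. The article does not say which model the app uses.

```python
import re
from typing import Dict, List, Tuple

# Tiny invented lexicon (an assumption); negative weights mark negative sentiment.
LEXICON: Dict[str, float] = {
    "fail": -2.0, "failure": -2.0, "reject": -2.0, "penalty": -1.5,
    "disqualified": -2.0, "strict": -1.0, "difficult": -1.0,
    "welcome": 1.5, "encourage": 1.5, "support": 1.0, "growth": 1.0,
}


def sentence_sentiment(text: str) -> List[Tuple[str, float, List[str]]]:
    """Score each sentence as the mean lexicon weight of its words; also list its negative words."""
    results = []
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        words = re.findall(r"[a-z]+", sentence.lower())
        if not words:
            continue
        score = sum(LEXICON.get(w, 0.0) for w in words) / len(words)
        negatives = [w for w in words if LEXICON.get(w, 0.0) < 0]
        results.append((sentence, score, negatives))
    return results


text = "Applicants who fail the exam will be disqualified. We welcome all applicants."
for sentence, score, negatives in sentence_sentiment(text):
    print(f"{score:+.3f} {negatives} {sentence}")
```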

- Use of Strict Keywords in Job Descriptions

Similarly, excessive use of demanding keywords is undesirable in a job description. Phrases such as “who fail,” “will not be considered,” and “must have” should be avoided or replaced with positive, encouraging wording such as “good to have” or “add-on.”
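Strict phrasing can be flagged with a small set of regular expressions. The three patterns come from the examples in the text. The suggestion mapping is an assumption of this sketch, as is the choice to flag phrases rather than rewrite them automatically. Swapping “must have” for “good to have” changes the actual requirement, so a person should make that decision.

```python
import re
from typing import List, Tuple

# Patterns based on the article's examples; suggestions are illustrative assumptions.
STRICT_PATTERNS = {
    "must have": re.compile(r"\bmust[- ]have\b", re.IGNORECASE),
    "will not be considered": re.compile(r"\bwill not be considered\b", re.IGNORECASE),
    "who fail": re.compile(r"\bwho fail(s|ed)?\b", re.IGNORECASE),
}
SUGGESTIONS = {"must have": "good to have"}


def flag_strict_phrases(text: str) -> List[Tuple[str, int, str]]:
    """Return (phrase, start offset, suggestion) for each strict phrase found, sorted by position."""
    hits = []
    for phrase, pattern in STRICT_PATTERNS.items():
        for m in pattern.finditer(text):
            hits.append((phrase, m.start(), SUGGESTIONS.get(phrase, "rephrase positively")))
    return sorted(hits, key=lambda h: h[1])


text = "Candidates who fail to submit a resume will not be considered. A degree is a must-have."
for phrase, start, suggestion in flag_strict_phrases(text):
    print(start, phrase, "->", suggestion)
```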

Conclusion

The purpose of a job description is to encourage a wide group of people to apply. The choice of language and words in job descriptions therefore plays a crucial role in promoting applicant diversity. Factors such as content, tone, language, and format can directly or indirectly influence a company’s hiring process.